Recording reality with 360 Stereoscopic VR Video

I first started shooting 360 still photography with an SLR film camera on a special pano head, stitching still-frame QTVR (QuickTime VR) movies from scanned photographs. A single QTVR pano was a significant undertaking, and most hardware at the time couldn't even play one back full screen. I shot my first 360 video for a pitch demo in 2013:

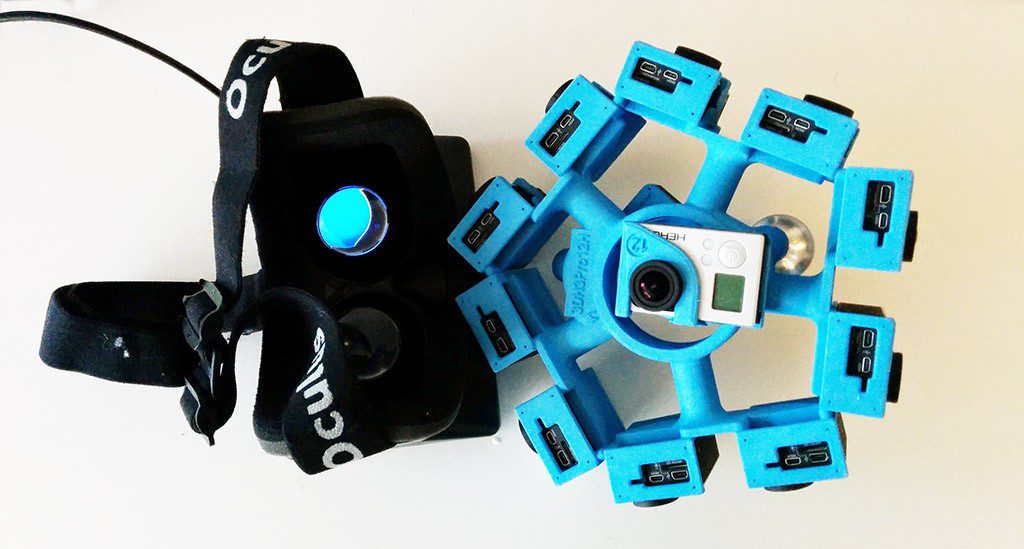

The fact that this was even possible, never mind that it was playing on a gyroscopically controlled mobile device, would have been unimaginable to me only a few years earlier. Now, 12 months later, I've been experimenting with shooting 360 video in 3D for virtual reality headsets. The concept hasn't changed; it just now involves shooting 400 panos per second across 14 cameras.

I shoot monoscopic 360 video using a six-camera GoPro rig, but this only goes so far in VR. It's possible to feed the same 360 video to both the left and right eye, but the result is a very flat experience: aside from the head tracking, it's little different from waving around an iPad. To shoot 360 in stereo I needed a way to shoot two 360 videos simultaneously, about 60 mm apart (roughly the average interpupillary distance, or IPD).


One can't simply place two of these six-camera heads side by side, since each would occlude the other. The solutions so far have been to double the number of cameras, with each pair offset for the left and right eye. This works, but it increases the diameter of the rig, which exacerbates parallax, one of the biggest issues when shooting and stitching 360 video: the farther apart adjacent lenses sit, the more differently they see nearby objects, and the harder those objects are to blend across a seam. Parallax was enough of an issue that when shooting 3D 360 video I found it very difficult to get a clean stitch within about 8 to 10 feet of the camera. Unfortunately this is the range in which 3D seems to have the most impact, but carefully orienting the cameras to minimize motion across seams does help significantly.
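A rough calculation helps show why close subjects are so hard to stitch. The sketch below is a simplification, not a model of any particular stitcher: it assumes two adjacent lenses whose optical centres sit a fixed baseline apart, a subject on the seam between them, and a stitch template aligned for distant objects. The two lines of sight to the subject then differ by 2·atan(baseline / (2·distance)), and dividing by the degrees-per-pixel of the output pano turns that into seam error in pixels. The 10 cm baseline is a hypothetical value, not a measurement of any rig; the 2300-pixel width matches the pano size used later in this workflow.

```python
import math


def parallax_degrees(baseline_m: float, distance_m: float) -> float:
    """Angle between the lines of sight from two lenses `baseline_m` apart
    to a point `distance_m` away on the perpendicular bisector of the baseline."""
    return math.degrees(2 * math.atan(baseline_m / (2 * distance_m)))


def seam_error_pixels(baseline_m: float, distance_m: float, pano_width_px: int = 2300) -> float:
    """Approximate seam misalignment in an equirectangular pano, assuming the
    stitch template is aligned for distant (effectively infinite) objects."""
    px_per_degree = pano_width_px / 360.0
    return parallax_degrees(baseline_m, distance_m) * px_per_degree


if __name__ == "__main__":
    FOOT_M = 0.3048
    baseline = 0.10  # hypothetical spacing between adjacent lens centres, in metres
    for feet in (3, 6, 10, 20, 50):
        err = seam_error_pixels(baseline, feet * FOOT_M)
        print(f"{feet:>3} ft: {err:5.1f} px of seam error")
```

For small angles the error is roughly proportional to baseline ÷ distance, so halving the subject distance roughly doubles the mismatch, and a wider rig makes it worse at every distance.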

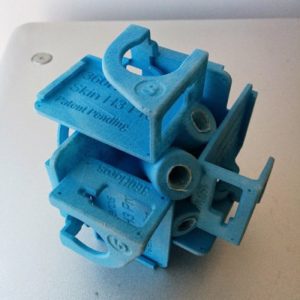

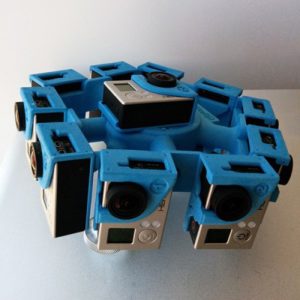

Unless you want to build your own, there are only a handful of 360 video rigs commercially available. Most of these are holders that carefully orient an array of GoPro cameras. At the time of my testing, a small operation out of upstate New York called 360Heros was 3D printing a limited number of 360 3D rigs that hold 12 to 14 cameras. I used a 12-camera rig that shot 3D horizontally but blended to a 2D image vertically. Interior shots worked well with this rig, but I regretted not having a 14-camera rig, since I was filming outdoors where much of the interest was above or slightly below the viewer. In scenes without a defined flat ground plane or ceiling, I found the transition from 3D around the horizon to 2D above and below it very noticeable.


Working with 12 GoPro cameras is another significant challenge. Adjusting the settings, managing the SD cards and footage, and charging batteries for 12 cameras is a monumental task. Fortunately the GoPro iPhone app takes some of the pain out of setting up each camera for 360 video, but be warned: I frequently found that some cameras would reset, or the app would not apply the settings properly. It's important to double-check everything. On a few occasions an entire afternoon of shooting was ruined because one of the 12 cameras was configured incorrectly.

In terms of camera settings, I shoot most of my 360 videos in the 960 video mode at 100 fps on GoPro HERO3 Black cameras, with Protune enabled and white balance turned off. Since the videos will all be stitched into a single file to be played on a low-resolution device, I found it much better to sacrifice resolution for a higher frame rate. Shooting at 100 fps gives finer granularity for synching: frames are 10 ms apart rather than about 33 ms at 30 fps, so once the cameras are aligned to the nearest frame they are off by at most 5 ms instead of roughly 17 ms. Although GoPro's remote lets you start recording on up to 50 cameras with one click of a button, some cameras will inevitably start recording at slightly different times. This is particularly problematic with lower-quality SD cards or when mixing different makes. When stitching videos with a lot of motion, being out of sync by just a few milliseconds can create noticeable seams. In addition to sound, the cameras can be synched using camera motion. Before each shot it is imperative to check that all cameras are recording, voice the take number, make a few sharp claps, and spin the rig head a few times. Later in the process this lets you synch all 12 videos, and then the final pair of 360 panoramas when they are multiplexed (muxed) into a single stereoscopic video.
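Lining up clap peaks by eye works, but the same idea can be automated. The following is a minimal sketch, not how AVP or After Effects do it internally: it assumes each camera's audio has already been extracted to a mono NumPy array at a common sample rate (the extraction step is not shown), finds the lag that maximises the cross-correlation with a reference camera, and converts that lag to whole frames at 100 fps. The function names are hypothetical.

```python
import numpy as np


def estimate_offset_samples(reference, other) -> int:
    """Return how many samples later a shared event (e.g. a clap) occurs in
    `other` than in `reference`, using the peak of their cross-correlation.

    A positive result means `other` started recording earlier, so that many
    samples would be trimmed from its start to line it up with `reference`.
    """
    # Copy to float and remove any DC offset so it doesn't bias the peak.
    ref = np.asarray(reference, dtype=float)
    oth = np.asarray(other, dtype=float)
    ref = ref - ref.mean()
    oth = oth - oth.mean()
    # In "full" mode, output index k corresponds to a lag of k - (len(ref) - 1).
    corr = np.correlate(oth, ref, mode="full")
    return int(np.argmax(corr)) - (len(ref) - 1)


def offset_in_frames(offset_samples: int, sample_rate: int, fps: float = 100.0) -> int:
    """Convert an audio offset in samples to the nearest whole video frame."""
    return round(offset_samples / sample_rate * fps)


if __name__ == "__main__":
    sr = 8000
    rng = np.random.default_rng(0)
    clap = rng.standard_normal(200)           # short synthetic "clap" burst
    ref = np.zeros(sr)
    ref[1000:1200] = clap
    cam = np.zeros(sr)
    cam[1800:2000] = clap                     # this camera started 0.1 s earlier
    lag = estimate_offset_samples(ref, cam)
    print(lag, offset_in_frames(lag, sr))
```

`np.correlate` is O(N·M), so for full-length clips it is more practical to correlate only a window around the claps, or to use an FFT-based correlation such as `scipy.signal.correlate(..., method="fft")`.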

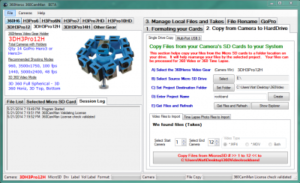

Collecting the footage from so many cameras and organizing it into folders for each eye and each take used to involve a lot of manual file management and custom Python scripts. Thankfully Michael Kintner, the founder of 360Heros, has written CamMan.
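For anyone without CamMan, the kind of script it replaces looks roughly like this. It is a hypothetical sketch, not CamMan's behaviour: it assumes each SD card has been copied into its own folder named `cam01` through `cam12`, that odd-numbered cameras feed the left eye and even-numbered ones the right (the real mapping depends on how your rig is numbered), and that the Nth clip on every card belongs to take N. It ignores GoPro's chaptered files for long recordings, so a take that spans several files would throw the numbering off.

```python
import shutil
from pathlib import Path

# Hypothetical camera-to-eye mapping: odd camera numbers -> left, even -> right.
EYE_BY_CAMERA = {f"cam{n:02d}": ("left" if n % 2 else "right") for n in range(1, 13)}


def plan_copies(card_root: Path, dest_root: Path, eye_by_camera=EYE_BY_CAMERA):
    """Yield (source, destination) pairs laid out as takeNN/<eye>/<camera>.MP4,
    assuming the Nth clip (in sorted filename order) on every card is take N."""
    for cam_dir in sorted(p for p in card_root.iterdir() if p.is_dir()):
        eye = eye_by_camera.get(cam_dir.name)
        if eye is None:
            continue  # skip folders that aren't known cameras
        clips = sorted(cam_dir.glob("*.MP4"))  # case-sensitive on most Linux filesystems
        for take, clip in enumerate(clips, start=1):
            yield clip, dest_root / f"take{take:02d}" / eye / f"{cam_dir.name}.MP4"


def organize(card_root, dest_root):
    """Copy every planned clip into place, creating folders as needed."""
    for src, dest in plan_copies(Path(card_root), Path(dest_root)):
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)
```

Keeping the planning step separate from the copying makes it easy to print the plan and sanity-check it before touching hundreds of gigabytes of footage.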

It's clunky but very effective software that automates much of the process of formatting, naming, and collecting videos, and it was essential to my process. Once the individual videos are collected, there are a few options for stitching the final panos. This used to involve batch-stitching thousands of still-frame panoramas with programs like PTGui and then generating a video from those files; some of the earlier 360 videos I made took almost 24 hours to render. Now there are two video stitching packages (Video Pano Pro and Kolor AVP) that speed this process up significantly using the GPU. Video Pano Pro only works with NVIDIA cards with CUDA support and still requires PTGui to create a stitch template. The stitch template is where you define the characteristics of the cameras and identify matching points across cameras, which determine how the images are warped and stitched together. Kolor AVP, which I use, also has a full set of tools for synching, stitching, and rendering video panoramas.


This process is well documented on Kolor's site, so there is no need to get into the details here, but the basic approach is to generate two videos, one for each eye. It's important to make sure your stitch templates share the same orientation and rotation. My process is to stitch one eye first, copy that template to the other eye, and then adjust the control points as needed.


So coming out of AVP I would have two video panos, one for each eye, both 2300 × 1150 and still at 100 fps. I then stacked these two panos one on top of the other in After Effects and used the audio track to make sure they were perfectly synched. This is where a couple of sharp claps during recording are very helpful.
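Stacking the two eyes works because both panos use the same equirectangular layout: 360° of yaw spans the width and 180° of pitch spans the height, which is why each one is 2:1. The NumPy sketch below shows the same over/under layout for a single frame. It assumes frames are already decoded into height × width × channel arrays and puts the left eye on top, a common convention, though it's worth checking what your player expects. The hypothetical `equirect_pixel` helper illustrates why the two stitch templates must share orientation: a given direction should land at the same (x, y) in both halves, offset only by the height of one pano.

```python
import numpy as np


def stack_over_under(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Stack two equirectangular frames (H x W x C) into one top/bottom frame,
    left eye on top (an assumed convention)."""
    if left.shape != right.shape:
        raise ValueError(f"eye frames differ in shape: {left.shape} vs {right.shape}")
    return np.concatenate([left, right], axis=0)


def equirect_pixel(yaw_deg: float, pitch_deg: float, width: int, height: int):
    """Map a viewing direction to (x, y) in an equirectangular frame:
    yaw -180..180 spans the width, pitch +90..-90 spans the height."""
    x = (yaw_deg + 180.0) / 360.0 * width
    y = (90.0 - pitch_deg) / 180.0 * height
    return x, y
```

With two 2300 × 1150 eyes, the stacked frame is 2300 × 2300, matching the final export size.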


Finally, there is a filter for After Effects called quickS3d (http://www.inv3.com/quicks3d) that gives you fine control over aligning the two videos, adjusting convergence, rotation, and keystoning to yield a more precisely muxed file. After all the adjustments are made, the final step is to export a 2300 × 2300 video at 30 fps. Kolor also ships a free player that makes testing 3D video on a VR headset relatively painless.
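The 100 fps panos ultimately become a 30 fps file. After Effects handles this conversion itself (and may blend frames depending on its settings); the sketch below shows only the simplest alternative, nearest-frame decimation, to make the arithmetic concrete. Because 100 / 30 is not a whole number, consecutive output frames are separated by a mix of 3 and 4 source frames. The function name and integer frame rates are assumptions for illustration.

```python
def source_frame_indices(n_source_frames: int, src_fps: int = 100, dst_fps: int = 30):
    """For each output frame, pick the nearest source frame (no blending)."""
    n_out = n_source_frames * dst_fps // src_fps  # whole output frames that fit
    return [min(round(i * src_fps / dst_fps), n_source_frames - 1) for i in range(n_out)]


if __name__ == "__main__":
    idx = source_frame_indices(100)  # one second of 100 fps footage
    gaps = [b - a for a, b in zip(idx, idx[1:])]
    print(idx[:8], gaps[:8])
```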

So what does the end result of this process look like? Stereo VR video is incredibly immersive. You are transported in time and space, but even small mistakes show up in a big way: errors in stitching or camera settings are magnified into flaws in the fabric of reality.

The fixed IPD can also be a problem for some viewers. Each of our eyes receives a slightly different view of our environment, and it's that offset which creates the feeling of depth that comes from 3D. In VR, the image each eye receives comes from a virtual camera in the case of CG, or from one of two physical cameras in the case of video. When the distance between those two cameras does not match a viewer's natural IPD, it can be very nauseating for some people. CG-rendered environments let the user adjust the IPD to match their eyes, but with video that value is fixed to the distance between the cameras at the time of shooting.
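One simplified way to see what a mismatched IPD does: if the headset reproduces the recorded angles faithfully and we consider only binocular disparity for a point straight ahead, a viewer whose IPD differs from the camera spacing triangulates that point at distance × IPD / baseline. The sketch below is an idealised geometric model, not a model of nausea; it ignores lens distortion, motion parallax, and every other depth cue. The 70 mm IPD in the example is a hypothetical viewer.

```python
import math


def disparity_angle(baseline_m: float, distance_m: float) -> float:
    """Angle (radians) between the two lines of sight to a point straight ahead."""
    return 2 * math.atan(baseline_m / (2 * distance_m))


def perceived_distance(distance_m: float, camera_baseline_m: float, viewer_ipd_m: float) -> float:
    """Distance at which a viewer with `viewer_ipd_m` would triangulate the
    disparity recorded by cameras `camera_baseline_m` apart, using only
    binocular disparity."""
    angle = disparity_angle(camera_baseline_m, distance_m)
    return viewer_ipd_m / (2 * math.tan(angle / 2))


if __name__ == "__main__":
    # A 60 mm rig viewed by a hypothetical viewer with a 70 mm IPD.
    print(f"{perceived_distance(2.0, 0.060, 0.070):.2f} m")
```

In this model a 2 m object recorded with a 60 mm baseline would appear at about 2.33 m to a 70 mm viewer, so the whole scene is subtly rescaled in a way the viewer cannot correct.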

Additionally, the 3D rig does not model the natural movement of our eyeballs in space. As we turn our heads and look around, the movement of our neck and eyes differs significantly from the constraints of the camera rig, which effectively places our eyeballs on the surface of a 14” spherical swivel fixed in space. For this and other reasons, 360 3D video tends to strain the viewer. VR video is also a totally passive experience, with little interaction beyond looking around. The viewer cannot move through, lean forward, or explore the environment in any way; they are stuck on a linear path and locked into the physical position of the camera during recording.

But even with these limitations, immersive 3D VR video is a breakthrough in our ability to record reality. I like to think of it in the context of the early days of film and imagine the rapid improvements in hardware and technique to come. The current problems with parallax, the limited resolution, and the fixed, unnaturally gimbaled experience are surely only small hurdles in that process. And for all the technical challenges, I think the most interesting question is: what will we craft with this technology? What lies beyond the easy and obvious thrills of the titillating and extreme?